Let’s get you started on product experimentation. This guide is written through the lens of a Growth Product Manager, but the principles apply to anyone in charge of Product Growth.

Table of Contents:

Where to start

What and how to prioritize

Key skills for a person owning Product Growth

Pitfalls to avoid during experimentation

Analyzing results

Sharing the results

1. Where to start

Part 1:

Imagine this: You've been hired to do Growth but lack the resources to do any experimentation. Founders/leaders are skeptical of you testing things and blocking crucial engineering resources. You are frustrated, and before you know it, you start looking for a job elsewhere.

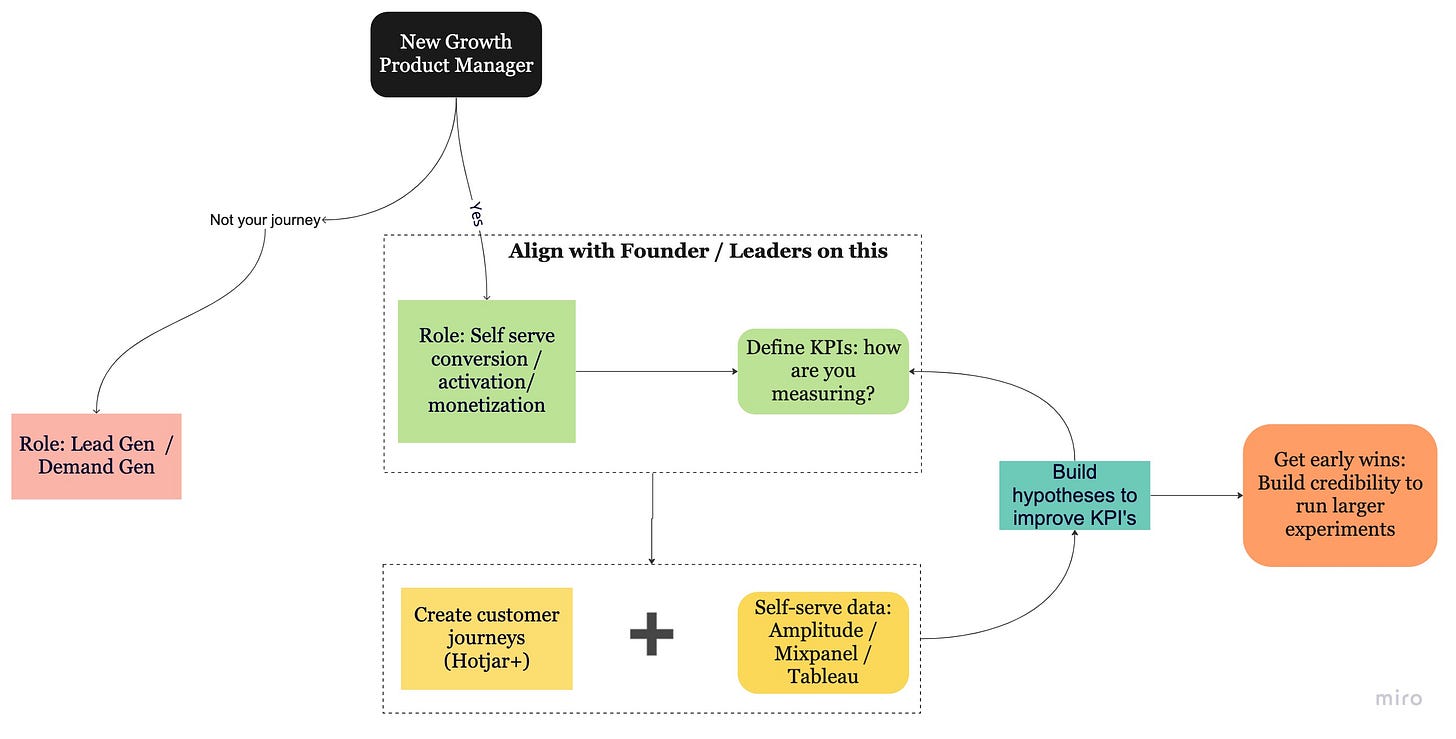

If you are starting in a new Growth position, this is what you need to get right to avoid the situation above:

Alignment on your role

I cannot stress enough how important this alignment is. The thing is, startups only know growth = more customers.

A startup leader might think about growth in terms of acquisition, lead generation, adoption, more recurring revenue, etc. It is your job to clarify what their perception of this role is.

As a Growth PM, your focus should be driving self-serve conversion, adoption, and maximizing revenue. You are not directly responsible for lead generation.

Defining product KPIs

The next step is to align on how the product defines certain KPIs such as activation, adoption, engagement, etc.

Do not assume that the company has a clear definition of these indicators. You'll be surprised to see how few companies have data-driven and company-wide accepted definitions of these KPIs.

A big part of your role is to collaborate and align on these definitions with stakeholders and make them widely accepted.

These KPIs will be what you optimize when you run growth experiments.

The customer journey

As basic as this sounds, I've observed how few PMs use Hotjar to outline the customer journey. Do you know the drop-off points in your customer's journey? If not, what are you optimizing for? You might have little time initially to get the entire customer journey right, but you can start with your goal for the quarter and work backward. Use the Amplitude Chrome extension to chart product data and find the drop-off points in the customer journey.

Self-serve data

It's common for early-stage companies to have limited data resources. You may not have the luxury of a dedicated analyst. Learn to self-serve data in Amplitude / Mixpanel, and request cohort data in Tableau dashboards and funnel charts. These are good starting points for building some hypotheses for early tests.

Getting small wins

Also, did I mention you do not have all the time in the world to do the above? A big mistake you can make is waiting for clarity, more direction, or a high-level alignment. No one is coming. This is where you need to use the customer journey you mapped above and start executing on some low-hanging fruit. Make those minor tweaks, show results, build credibility, and then move on to the larger bets.
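Finding the drop-off points in the customer journey takes only a few lines once you have exported step counts from your analytics tool. This is a minimal Python sketch, not a feature of Amplitude, Mixpanel, or Hotjar. The step names and user counts are made up for illustration.

```python
def funnel_dropoff(steps):
    """Return (step_name, users, conversion_from_previous_step) per step.

    `steps` is an ordered list of (name, user_count) pairs, for example
    copied from a funnel chart. The first step has no previous step,
    so its conversion is None.
    """
    rows = []
    prev = None
    for name, users in steps:
        rate = None if prev in (None, 0) else users / prev
        rows.append((name, users, rate))
        prev = users
    return rows


def biggest_dropoff(steps):
    """Name of the step with the lowest conversion from the step before it."""
    rows = [r for r in funnel_dropoff(steps) if r[2] is not None]
    return min(rows, key=lambda r: r[2])[0]


# Hypothetical counts for illustration only.
example_funnel = [
    ("visited_signup", 1000),
    ("completed_signup", 400),
    ("finished_onboarding", 300),
    ("started_checkout", 60),
    ("paid", 45),
]

if __name__ == "__main__":
    for name, users, rate in funnel_dropoff(example_funnel):
        label = "-" if rate is None else f"{rate:.0%}"
        print(f"{name:22s} {users:5d} {label}")
    print("Biggest drop-off:", biggest_dropoff(example_funnel))
```

The step with the lowest step-to-step conversion is a reasonable first candidate for a low-hanging-fruit fix, as long as enough users reach it for a change to matter.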

Part 2:

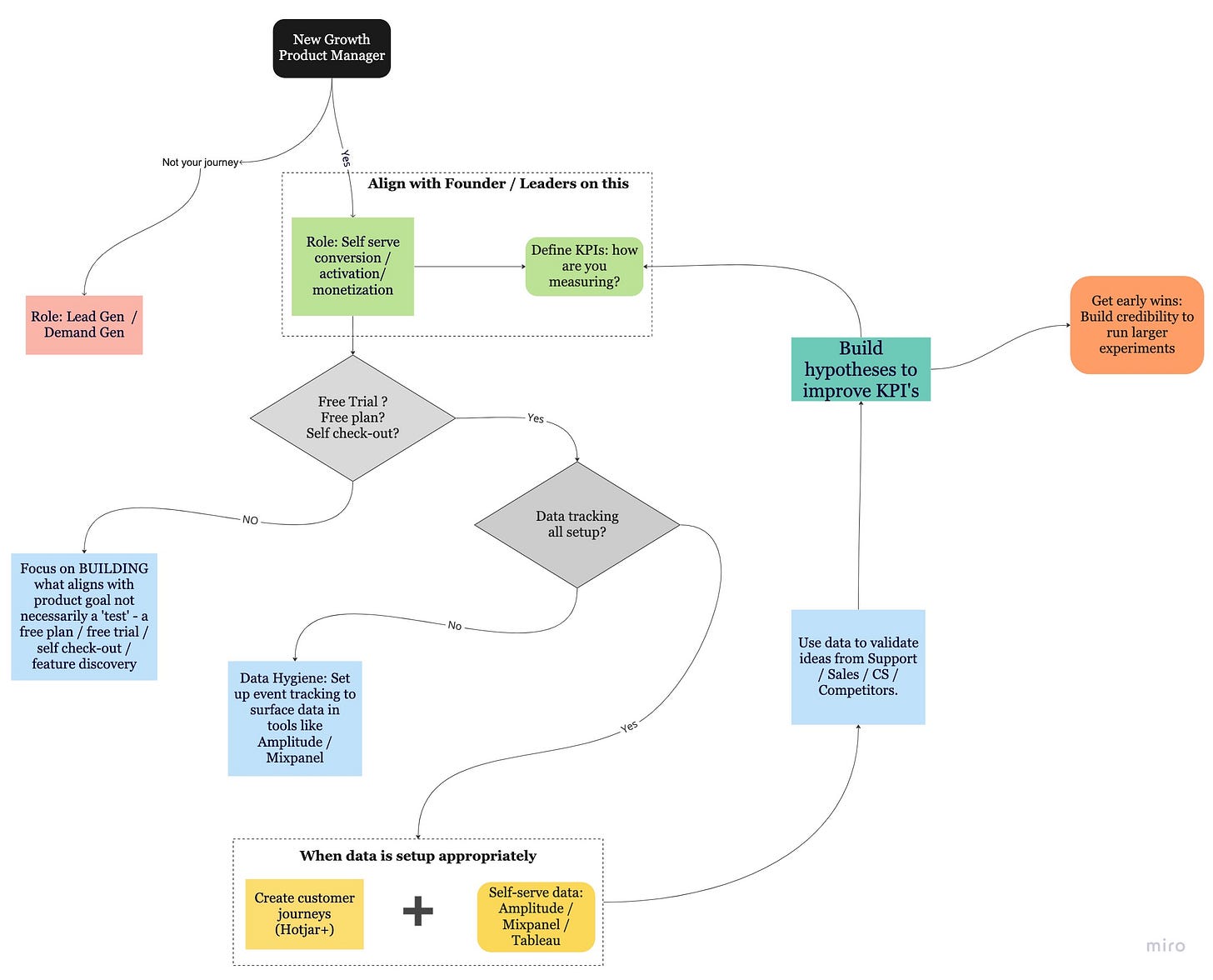

Now imagine this: you're entrusted to do PLG, and you assume a big part of your role is to run multiple experiments, be best buddies with your data analyst, and hack away at things. You are going to increase the conversion, adoption, trials, etc. But what if the product doesn't have a free plan or a trial? 😟 Oh, not the role you signed up for?

Here's what you can do if you find yourself in this situation.

Launch. Don't test.

Being product-led doesn't always mean your product has a free trial or a free plan. Although it is arguably the best way to experience a product, remember that you have limited resources. So if your product doesn't have a free trial or freemium, what's the best way to ungate the product experience? Create a video tour of your product and put it on the marketing site. If this increases demo bookings, that's a success! You can use this to validate that a free trial or freemium approach might work for your product and BUILD a free plan or trial.

Also, PLG, at its core, is being user-led. So if your product has a free sign-up, but the checkout experience is terrible or customers only adopt one feature because the in-app discovery of other features is bad… maybe that's what you want to fix first. This is like building any other product feature and might not need a test. So, a Growth PM doesn't always have to run "experiments."

Setting up analytics.

You could have a free trial and a free plan, and customers can self-checkout. But you are not tracking any events because, at the time the product was built, data was seen as overhead and not necessary. Now you have no data to gather insights from or to measure any KPIs with. As a Growth PM, you are responsible for building the necessary tracking of events in the customer journey: sign-up, FTUX, and checkout flow. Be a champion of good data tracking, measuring the success of critical flows, improving those, and verifying those improvements worked.

Let's get testing.

Maybe you are the lucky one. Everything above is set, and you need to optimize the sign-up flow and increase conversion and other growth metrics. So, where do you look for ideas to test? Your safe bet is looking at competitors who have a similar model. Try to correlate things that they do well in the area of the product you want to improve and the experience downstream. Build hypotheses based on your product data and launch some quick tests. Ideas can also come from internal teams - Support / Sales / CS - build strong relations with these teams so that you know the areas that can be improved.

2. What and how to prioritize

You are all set to experiment now, but how do you prioritize which experiments to run?

Well, it depends on what metric you are trying to optimize for. I still get blank stares when I say this, so let’s dive deep into what this means.

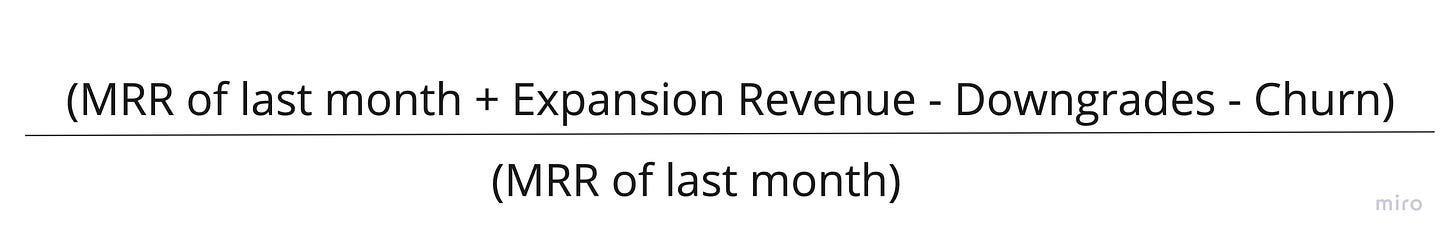

As Product Growth, you are in a unique situation where you primarily try to optimize for the business metrics by optimizing the value delivered to the user in-app. In SaaS businesses, you are often trying to optimize the overarching goal of increasing Net Revenue Retention (NRR).

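NRR is commonly defined as (starting MRR + expansion MRR - downgrade MRR - churned MRR) / starting MRR, measured over a period for the customers you already had at its start.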

Another very commonly used goal is increasing Average Revenue per Account, or ARPA.

In B2B SaaS businesses, these definitions basically target increasing monthly recurring revenue, expansion revenue, and reducing downgrades and churn.
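To make the two metrics concrete, here is a minimal Python sketch. It assumes the common formulation of NRR, (starting MRR + expansion - downgrades - churn) / starting MRR for existing customers only, and ARPA as MRR divided by paying accounts. Your company may define either differently, and all numbers are hypothetical.

```python
def net_revenue_retention(starting_mrr, expansion, downgrades, churn):
    """NRR for a cohort of existing customers over a period.

    Assumed formulation:
    (starting MRR + expansion - downgrades - churn) / starting MRR.
    Revenue from new customers is deliberately excluded.
    """
    if starting_mrr <= 0:
        raise ValueError("starting_mrr must be positive")
    return (starting_mrr + expansion - downgrades - churn) / starting_mrr


def arpa(mrr, paying_accounts):
    """Average revenue per account: MRR divided by paying accounts."""
    if paying_accounts <= 0:
        raise ValueError("paying_accounts must be positive")
    return mrr / paying_accounts


if __name__ == "__main__":
    # Hypothetical month: $100k starting MRR from existing customers.
    nrr = net_revenue_retention(
        100_000, expansion=12_000, downgrades=3_000, churn=5_000
    )
    print(f"NRR: {nrr:.0%}")
    print(f"ARPA: ${arpa(104_000, 800):.2f}")
```

An NRR above 100% means expansion revenue outpaced downgrades and churn in that period, even before counting any new customers.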

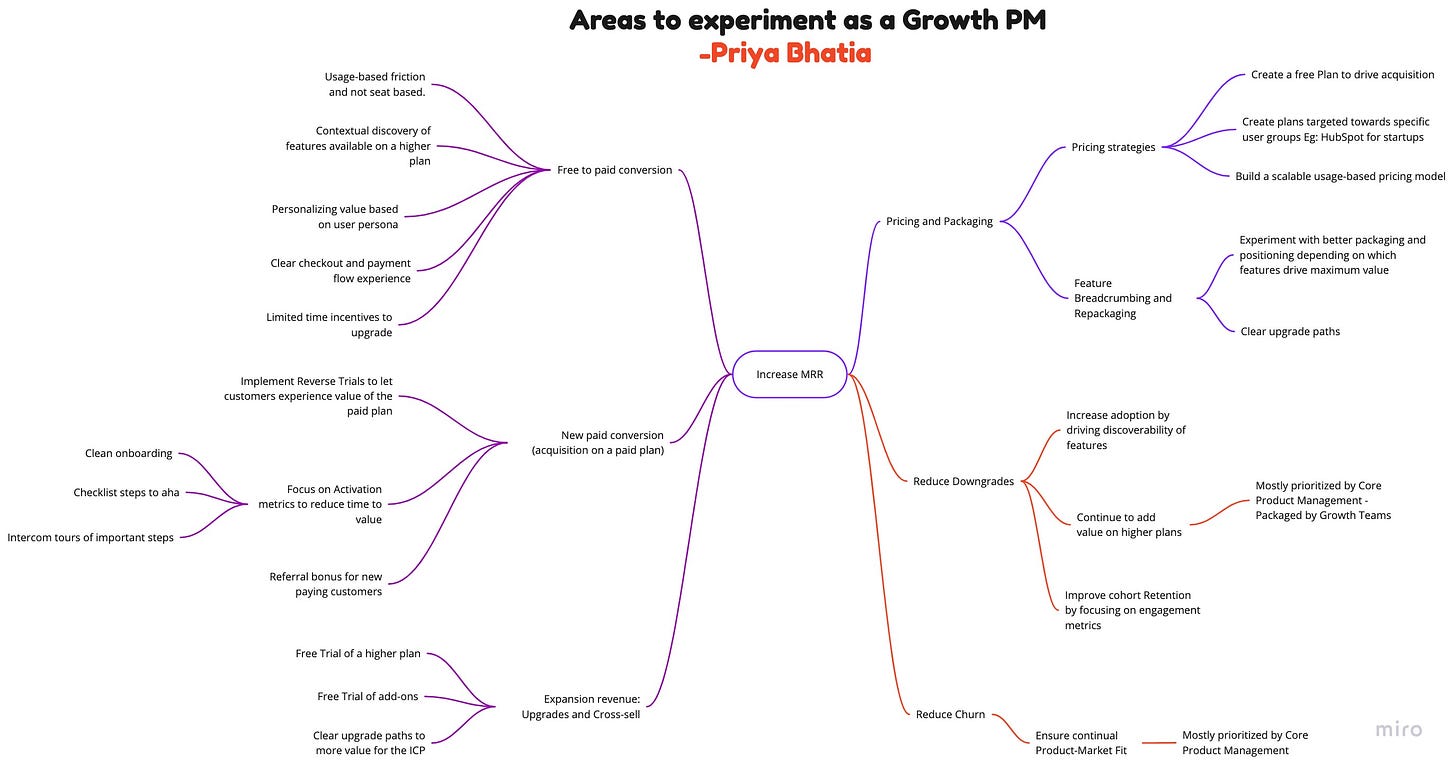

There are numerous ways you can increase MRR. What you prioritize depends on which metrics are a business priority and which are underperforming.

MRR can be increased by:

1. Increasing free to paid conversion

2. Increasing more paid conversion

3. Increasing expansion revenue (upsells and cross-sells)

4. Better pricing models and packaging

5. Reducing downgrades

6. Reducing churn

When times are good, companies generally focus more on price increases and acquisition, whereas when times are tight, companies focus more on retaining customers and driving adoption.

Once you’ve prioritized a goal to improve, you can explore multiple tactics or bets, which may or may not be an experiment. At any given point, you want to implement a portfolio of bets to diversify risk and maximize return in the same period. Always have a larger bet peppered with multiple smaller experiments.

For example, I’ve listed some tactics that can be explored under each area, but there can be many more depending on your product and the goal. In some cases, a deeper impact assessment or revenue forecast might be valuable depending on the size of the bet and the revenue target. This is just a starting point.
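For the impact assessment, a back-of-the-envelope estimate is often enough to compare bets. The Python sketch below is one hypothetical way to do it. The bet names, uplift guesses, confidence weights, and the rule of ranking by expected MRR gain per week of effort are all illustrative assumptions, not a standard framework.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    name: str
    monthly_users_exposed: int   # users who reach the step being changed
    baseline_conversion: float   # current conversion rate at that step
    relative_uplift: float       # guessed relative lift, e.g. 0.10 for +10%
    arpa: float                  # average monthly revenue per converted account
    confidence: float            # 0..1, how much you trust the uplift guess
    effort_weeks: float          # rough build and analysis cost

    def expected_mrr_gain(self) -> float:
        """Extra conversions per month times ARPA, discounted by confidence."""
        extra_conversions = (
            self.monthly_users_exposed
            * self.baseline_conversion
            * self.relative_uplift
        )
        return extra_conversions * self.arpa * self.confidence

    def score(self) -> float:
        """Expected monthly MRR gain per week of effort."""
        return self.expected_mrr_gain() / self.effort_weeks


def rank_bets(bets):
    """Sort bets from highest to lowest score."""
    return sorted(bets, key=lambda b: b.score(), reverse=True)


# Hypothetical bets for illustration only.
bets = [
    Bet("Simplify checkout form", 2_000, 0.05, 0.10, 100.0, 0.8, 2),
    Bet("New annual pricing plan", 5_000, 0.04, 0.20, 100.0, 0.4, 8),
    Bet("Upgrade prompt at usage limit", 1_500, 0.08, 0.15, 100.0, 0.6, 1),
]

if __name__ == "__main__":
    for bet in rank_bets(bets):
        print(f"{bet.name:32s} gain={bet.expected_mrr_gain():8.0f} score={bet.score():8.0f}")
```

In this made-up data the pricing change has the largest expected gain but the lowest gain per week of effort, which is the usual shape of a portfolio: one larger bet running alongside several cheaper ones.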

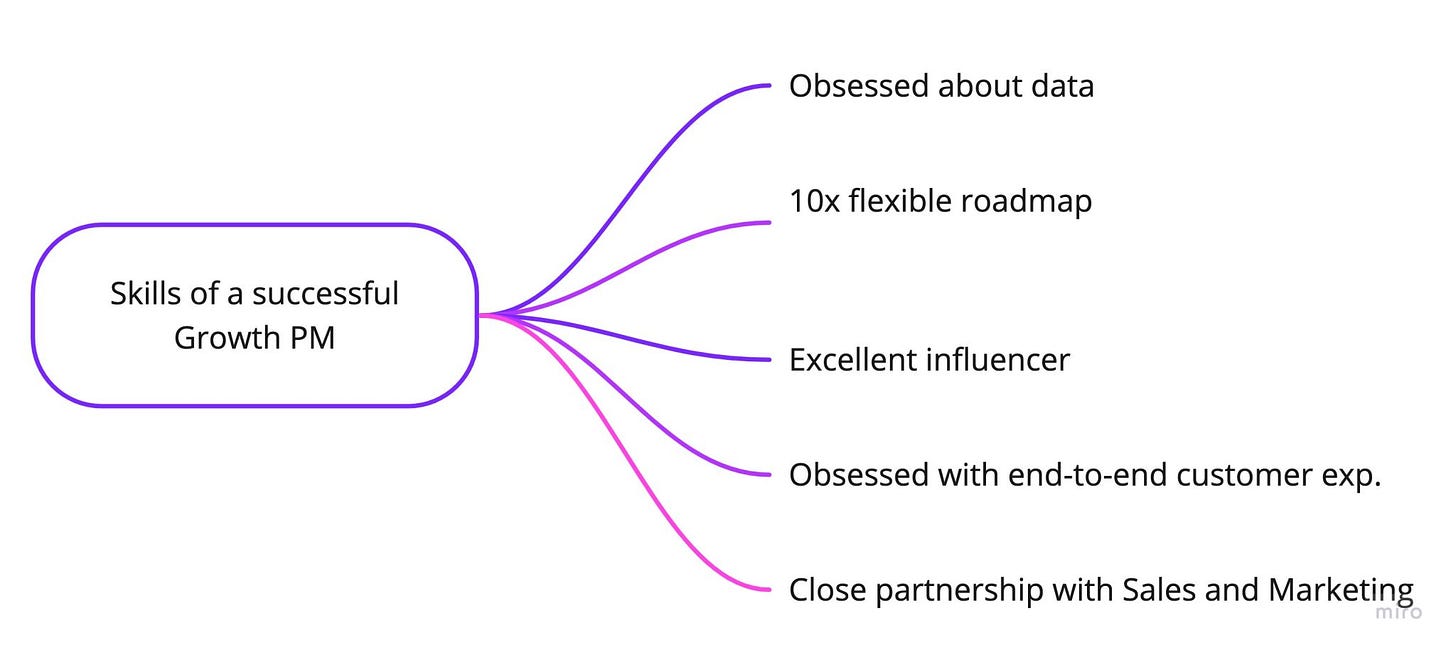

3. Key skills for a person owning Product Growth

Some skills that will help you quickly level up as a Growth professional:

Being obsessed with data

Growth PMs are not only data-driven (read data-informed) when outlining hypotheses for experiments and success metrics, but they are also curious about diving into data dashboards and metrics. A good Growth PM analyzes various funnel metrics, cohort activation, and retention charts, tracks experiment results, etc., daily.

Being curious about these data points reveals the biggest opportunities to drive the next experiment.

Having a flexible roadmap

A Growth PM’s roadmap needs to be flexible enough that you are always working on the highest-impact opportunity. Experimenting is not cheap; hence, you must adjust rapidly as you learn and uncover new data points. You also cannot spend months building an experiment; instead, you need minimum viable tests.

Being an excellent influencer

You cannot grow in a vacuum. To make product-led growth an org-wide priority, a Growth PM needs to share the results of their experiments continually. Eventually, you want to create a repository of learnings for other functions to learn from. You need to be a product growth evangelist to realize the actual impact of your work.

Being obsessed with end-to-end customer experience

Growth PMs obsess about customer problems in FTUX and how customers find the value they were promised. You also need to care about the overall customer journey to help users activate, convert, and retain, not just a part of the product.

Partnering with Sales and Marketing

A Growth PM is typically in charge of improving the inbound customer funnel. Growth PMs, therefore, should regularly interface with the Revenue and Marketing side of the org to:

a. uncover areas of friction in customer sign-up and conversion

b. run manual experiments, which help build validation without writing a line of code

4. Pitfalls to avoid during experimentation

Experiments should be well thought out as they aren't cheap to run - development resources, iterations, and analysis are expensive!

Here are some common pitfalls to avoid:

Did you have a clearly defined hypothesis?

Clearly defining the hypothesis is essential for methodical problem-solving, which leads to clear learning.

Do you have a clear way of measuring and analyzing the success metrics?

Metrics that will determine the success or failure of the test need to be outlined before running the tests and not after.

Did you try to dog-food and use your own product to see if it even makes sense and whether you will actually learn something?

Are you falling for the sunk cost fallacy?

Just because you have put in the work does not mean you should YOLO it.

Did you analyze the results correctly before moving on to the v2 of the experiment?

You should be able to isolate the impact of your tests before you decide on the next steps.

Are you taking a portfolio approach to running experiments to drive impact?

Don't aim for only a few massive tests; rather, run 2-3 major tests alongside a few smaller ones to create impact.

Are you being careful about what tests can be run simultaneously?

Make sure an experiment does not go live at a time when it might influence the results of another.
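Several of these checks can be made mechanical by writing each experiment down in a fixed shape before it runs. The Python sketch below records the hypothesis and primary metric up front and flags experiments that overlap in time on the same part of the product. The `Experiment` fields, the `surface` label, and the "same surface, overlapping dates" rule are hypothetical simplifications. Tests on different surfaces can still interact, so treat this as a first filter only.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Experiment:
    name: str
    hypothesis: str        # written down before the test starts
    primary_metric: str    # success metric, also fixed up front
    surface: str           # part of the product touched, e.g. "checkout"
    start: date
    end: date


def conflicts(a: Experiment, b: Experiment) -> bool:
    """True if two experiments touch the same surface on overlapping dates."""
    same_surface = a.surface == b.surface
    dates_overlap = a.start <= b.end and b.start <= a.end
    return same_surface and dates_overlap


def find_conflicts(experiments):
    """Return every pair of experiment names that should not run together."""
    pairs = []
    for i, a in enumerate(experiments):
        for b in experiments[i + 1:]:
            if conflicts(a, b):
                pairs.append((a.name, b.name))
    return pairs


# Hypothetical plan for illustration only.
plan = [
    Experiment("shorter-signup", "Fewer fields raise sign-up completion",
               "signup_completion_rate", "signup",
               date(2024, 1, 8), date(2024, 1, 28)),
    Experiment("social-login", "Social login raises sign-up completion",
               "signup_completion_rate", "signup",
               date(2024, 1, 22), date(2024, 2, 11)),
    Experiment("annual-toggle", "An annual toggle raises checkout conversion",
               "checkout_conversion_rate", "checkout",
               date(2024, 1, 8), date(2024, 2, 4)),
]

if __name__ == "__main__":
    print(find_conflicts(plan))
```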

5. Analyzing results

Don’t move on to the next experiment before analyzing the outcomes of the previous experiments. Here’s what you need to analyze the results:

Was it a success or a failure?

Once the experiment has ended, determine whether it moved the metric at all or not.

While <25% of experiments succeed, the rest are not all failures.

You fail only when you haven't learned anything about the metrics you were targeting.

Of course, the Why!

The most important step is to figure out the potential reasons for success or failure.

Was it the cohort size, a recent product launch, or the length of the experiment?

Was it a specific input metric or a combination of all those metrics?

Measuring correctly

Did you use the correct framework to measure the results?

Your data analyst should guide you, but one of the following should be used where appropriate:

• Statistical significance

• Continuous monitoring

• Sequential sampling

• Dynamic decision boundaries

Product levers

An experiment might be a success or a failure, but it should help you determine some of the levers that create a positive or negative impact on your metrics.

Modulating these levers generally becomes the basis of your following experiments.

Accuracy of results

An experiment's context determines its accuracy.

Note how far or close you are to the prediction of the success metric.

If you are far off from the prediction, that means you don't understand certain variables in the model and might need to re-evaluate what you think your product levers are!
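As an illustration of the statistical significance option and of comparing the outcome with your prediction, here is a minimal Python sketch using only the standard library. It is a textbook two-proportion z-test, chosen here for simplicity rather than taken from any particular tool. The function names and the example counts are assumptions.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.

    Returns (z, p_value) using the pooled-proportion standard error.
    This is a fixed-sample test: it assumes the sample size was chosen
    up front and the result is evaluated once at the end.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


def relative_lift(conv_a, n_a, conv_b, n_b):
    """Observed relative change of variant B over control A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    return (p_b - p_a) / p_a


def prediction_error(predicted_lift, observed_lift):
    """How far the observed relative lift landed from the predicted one."""
    return observed_lift - predicted_lift


if __name__ == "__main__":
    # Hypothetical counts: control 200/5000, variant 260/5000.
    z, p = two_proportion_z_test(200, 5000, 260, 5000)
    lift = relative_lift(200, 5000, 260, 5000)
    print(f"z={z:.2f} p={p:.4f} lift={lift:.0%}")
    print(f"prediction error: {prediction_error(0.10, lift):+.0%}")
```

If you check results repeatedly while the test is still running, a fixed-sample test like this overstates significance. That is the situation the sequential and continuous-monitoring approaches in the list are meant for, so ask your analyst which one fits.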

6. Sharing the results

Did you get the buy-in to run experiments, execute them, and expect no one will care about the results? If you thought you could run experiments in a bubble and not talk about the outcomes, you would probably fail at your role.

Here's why you need to care a little more:

Discovery of new data points:

The whole point of running experiments is getting closer to the truth and user psychology, uncovering the data points you may not have today. If you ran an experiment and did not share the results across the org, you are not internally promoting any new learning about the user. This means the whole idea of learning quickly through experimentation did not happen. Your job is to create an internal repository of learnings that can be used as a reference to run future tests.

Getting the buy-in to innovate:

As a growth person in charge of experimentation, you start with optimizing the existing flows. If you want to mature into a role where you take some larger bets that might be risky but let you innovate on a new loop or a pricing model, you'll have to build up credibility across the organization: senior leadership, Marketing, Sales, and other teams. This comes only by talking and sharing more about the experiments you've run.

Your idea loop:

You cannot generate unlimited ideas by working in a bubble. You need to create your flywheel of ideas that you can experiment on. Sharing the outcomes of your experiments is what keeps internal teams excited. When you are seen as the go-to person who runs experiments to collect insights on customers, people start reaching out to you about customer pain points or improving an area of a product that's not optimal, or some crazy idea that cannot be put on the roadmap without some validation, etc.

Sharing results:

I don't yet know of one best way to do this! So, I suggest you default to sharing results across the board. Post in as many Slack channels as possible (new launches, a results channel, and specific channels such as product/sales/marketing if the learning can help any of these teams), talk about them in your cross-functional team meetings, etc. Maintain a doc or a Google Sheet of all experiments and their outcomes. Just make sure the results are not tucked away in an AB test doc or a data Jira ticket.
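If you keep the experiment log as a sheet, a fixed set of columns makes it easier for other teams to scan. The Python sketch below shows one hypothetical layout and writes it as CSV, which a spreadsheet can import. The column names are assumptions, so adapt them to what your teams actually ask about.

```python
import csv
import io

# Hypothetical columns for an experiment log.
FIELDS = ["name", "hypothesis", "primary_metric", "outcome", "learning", "shared_in"]


def append_result(log_rows, **entry):
    """Add one experiment to the log, refusing entries with missing fields."""
    missing = [f for f in FIELDS if not entry.get(f)]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    log_rows.append({f: entry[f] for f in FIELDS})
    return log_rows


def to_csv(log_rows):
    """Serialize the log so it can be imported into a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(log_rows)
    return buffer.getvalue()


if __name__ == "__main__":
    log = append_result(
        [],
        name="shorter-signup",
        hypothesis="Fewer fields raise sign-up completion",
        primary_metric="signup_completion_rate",
        outcome="no significant change",
        learning="Field count is not the main blocker at sign-up",
        shared_in="#product, #growth-results",
    )
    print(to_csv(log))
```

Requiring the `learning` and `shared_in` columns is deliberate: an entry with no stated learning or no place it was shared is exactly the result that ends up tucked away.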

I hope this guide helps you get started on your experimentation journey.

Read it, share it, and drop comments about what you’d like to learn more about.
